AI is everywhere now. But seeing it used well, in ways that make real work faster, clearer, or smarter, is still rare. Most teams are still figuring out what that actually means and how to even talk about it.

A familiar pattern seems to repeat: leadership wants to "do something with AI," teams experiment with ChatGPT or add an AI plug-in to an existing tool, and six months later, nothing meaningful has changed.

It’s not because people don’t care or aren’t trying. It’s because the conversations themselves aren’t specific enough. "AI" means a dozen different things to different people, from writing assistants to data summaries to full automation. Without clarity on what’s actually being built, where it will live, and how it will fit into existing systems, the effort never turns into something operational.

This article is about making the shift from talking about AI to deploying it regularly in places where it generates real impact. By the end, you'll be able to steer your conversations straight to the specifics of AI implementation that genuinely improves how teams work.

This guide will help you understand how to:

- Define what problem AI is solving

- Deploy AI where work actually happens

- Design AI to augment, not replace

- Integrate AI with your organization’s data

- Build AI systems that last

Define what problem AI is solving

Start small, stay specific, and build from what’s real.

Many AI projects stall before they start because no one has clearly defined the problem. “AI” becomes the solution to everything, and therefore to nothing.

Think like a developer. Before writing code, good developers define what they’re making, who it’s for, where the data lives, and what success looks like. Yet it’s easy for teams to just purchase the latest tool to use AI at work without ever getting that specific.

You don’t need a full spec sheet. But before looking at different AI models or tools, anchor the work in a single real problem. Get targeted. Get small. Get specific. Focus on one task, one dataset, one measurable outcome. Then look for a tool that solves that specific problem.

When you guide these conversations with your team or leadership, keep grounding the conversation in the specifics:

- What’s the task?

- Who owns it today?

- Where does this issue or improvement live in your system?

- What slows it down?

And if you want to get more specific:

- Where is the data, and who uses it?

- How often does this process run, and what triggers it?

- What decisions or outputs depend on it being done well?

- Which tools or systems already touch this workflow?

- What happens when it fails or gets delayed?

- How do you know it’s complete or correct?

Once those answers are clear, the path forward almost draws itself. AI starts to make sense when it’s serving real work. It starts to feel better too, as people see small, concrete improvements and finally feel progress they can trust.

Deploy AI where work actually happens

AI works best when it lives where the work lives.

Right now, lots of teams add AI somewhere adjacent to real work, like a chatbot window, a Chrome plug-in, or a quick prototype of a tool built outside their main systems. It can look innovative, but the work itself doesn’t really change. AI ends up orbiting the business, often adding another layer of copy-pasting or context-switching rather than making the workflow smoother.

This happens for understandable reasons. Off-the-shelf tools are pushing AI features everywhere, and it’s easier to spin up an experiment there than to integrate something new into a core system. Teams are under pressure to show progress fast, so they reach for what’s visible instead of what’s embedded.

The real shift is moving from disconnected AI experiments to embedded intelligence that lives beside your data and inside real workflows. That’s where the leverage is. When targeted, practical AI works in the same systems people already use, small improvements ripple outward: less double work, faster feedback, better insight, and steadier flow.

To get there, teams have to think like builders, not consumers. Just adding another tool or interface isn't enough. Effective AI adoption happens when you wire intelligence into the workflows and data people already use daily.

The best way to begin is small and practical. Pick one workflow, one dataset, one visible problem. Once a single feature works inside an existing process, momentum builds quickly. People see it, trust it, and ask for more.

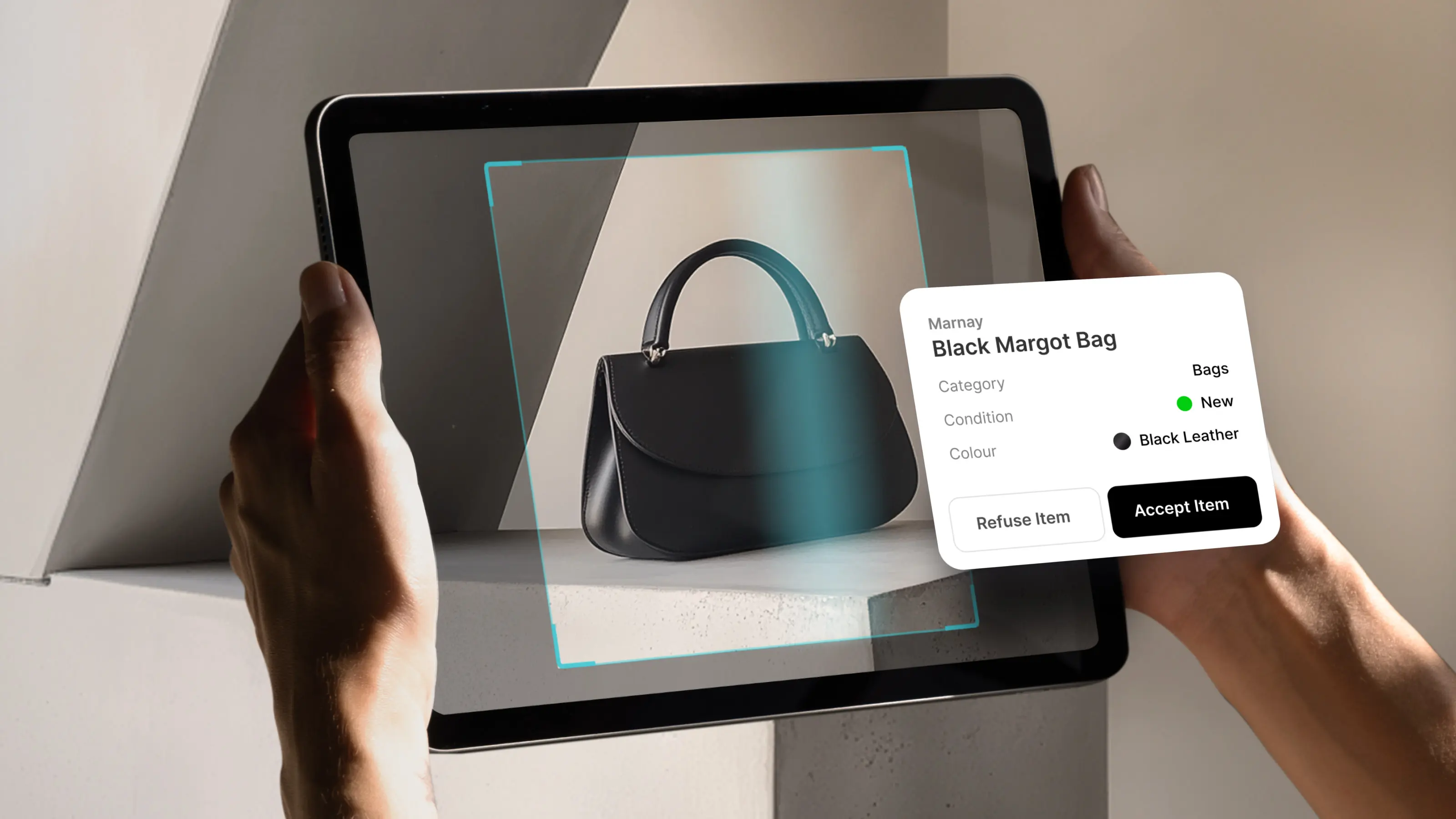

At Glide, the most practical AI we see our customers use works automatically in the background. It analyzes data and updates records without requiring a separate tool or interface. People just see the results in their existing workflows.

Design AI to augment, not replace

The real leverage isn’t in replacing people; it’s in amplifying them.

Most teams still think of AI as a replacement engine, something that could take over an entire workflow end-to-end. The logic seems sound: automate more, save time, cut cost. But that thinking misses where the real leverage is. The biggest gains don’t come from removing people; they come from amplifying them.

The teams getting the most out of AI aren’t automating everything. They're using AI to make their existing work faster and better, which lets them take on more complex tasks. They use it to speed up judgment-heavy work, shorten feedback loops, and turn scattered knowledge into momentum. It’s not magic. Today’s models are powerful pattern machines, not independent operators. They can accelerate what you already do well, but they still need context and guidance from humans who understand the work.

AI works best when it strengthens the parts of a process, not when it tries to rebuild the machine. It speeds up repetitive steps, clears bottlenecks, and helps people move faster and think more clearly. The result is smoother work, not the fantasy of work that disappears behind automation.

There’s a deeper layer to this. Each time you automate one part of the system, new layers of complexity appear. As tools improve, the scope of what your team can take on expands. That’s good. That’s growth.

The real opportunity is learning to design for that rhythm: helping your organization get comfortable with constant expansion, where AI handles the mechanical parts and people focus on the next layer that needs care and judgment.

If you’re in the middle of these conversations, make them concrete.

Instead of debating whether AI can “do it all,” or do “the whole of ___,” look at where it can add lift inside an existing process or make an existing person more valuable. Every workflow sits on a spectrum between manual and autonomous; the goal isn’t to jump to the end. It’s to move toward more capability and trust step by step.

That path might look like this:

1. Can AI help with a small part of [a specific task or role]?

2. Can AI help with larger parts of [a specific task or role]?

3. Could AI work in parallel with this person or team?

4. Can a system or manager orchestrate several of these AIs together?

Augmentation isn’t just realistic; it’s profitable. The biggest gains come not from replacing people, but from extending what skilled teams can do. The companies that win this next phase of AI adoption won’t be the ones with the fewest employees. They’ll be the ones whose people are equipped with the smartest tools, where judgment, creativity, and context are amplified by technology that understands the work instead of trying to replace it.

Integrate AI with your organization’s data

How do you put intelligence next to the data you already trust, without rebuilding everything or blowing up security?

Your data already exists. It’s already powering the systems and decisions your team depends on.

You don’t need to rebuild everything around it, and you probably shouldn’t. Most companies don’t have a data problem; they have a connection and interface problem. Information sits across sheets, databases, and tools that don’t talk to each other, and even when they do, people still struggle to see what matters.

The first, most practical opportunity for AI isn't to replace those systems. It's to surface insights, actions, and next steps through them by connecting the data you already trust and presenting it intelligently in the right place, at the right time, and in the right context.

When you’re guiding these conversations, keep them grounded in what’s safe and specific. Talk about starting small, maybe targeting a duplicated data source or a subset of data you already back up and control. Or focus on read-only access first, where AI can summarize and surface insights without touching the source. Your tools should let you define those boundaries.
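To make the read-only idea concrete, here is a minimal sketch, not a recommendation for any particular stack. It assumes the data sits in a SQLite file and uses Python's standard `sqlite3` module. The `tickets` table, its columns, and every function name are hypothetical stand-ins chosen for illustration. The point it shows is that the boundary is enforced by the connection itself: summaries can be computed, but a write attempt is rejected.

```python
import os
import sqlite3
import tempfile


def build_sample_db(path: str) -> None:
    """Create a tiny stand-in for a trusted data source (hypothetical schema)."""
    conn = sqlite3.connect(path)
    with conn:  # commits the statements below on success
        conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, status TEXT)")
        conn.executemany(
            "INSERT INTO tickets (status) VALUES (?)",
            [("open",), ("open",), ("closed",)],
        )
    conn.close()


def open_read_only(path: str) -> sqlite3.Connection:
    """Open the file via a SQLite URI with mode=ro so writes are refused."""
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


def summarize_statuses(conn: sqlite3.Connection) -> dict:
    """Count rows per status; this is the kind of summary an AI step could consume."""
    rows = conn.execute("SELECT status, COUNT(*) FROM tickets GROUP BY status")
    return dict(rows.fetchall())


def write_is_blocked(conn: sqlite3.Connection) -> bool:
    """Return True if the connection rejects an attempted insert."""
    try:
        conn.execute("INSERT INTO tickets (status) VALUES ('tampered')")
    except sqlite3.OperationalError:
        return True
    return False


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        db_path = os.path.join(workdir, "sample.db")
        build_sample_db(db_path)
        conn = open_read_only(db_path)
        print(summarize_statuses(conn))
        print("write blocked:", write_is_blocked(conn))
        conn.close()
```

Other databases express the same boundary differently, for example through a read-only role or a replica, so treat the SQLite URI as one illustration of the pattern and not the pattern itself.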

Conversations with IT or security will stall fast if the system you’re using demands unrestricted access to mission-critical data.

That’s what data-driven design really means: building visibility, interfaces, and intelligence around the data you already have.

Build AI systems that last

Prototypes are easy. Reality is hard.

We're inundated with demos in adverts or on LinkedIn. But production environments have gravity: data standards, security reviews, user access, dependencies, and deadlines. They expose fragile systems fast.

The way to make AI stick is to build on stable foundations. Use platforms that already handle the unglamorous work: authentication, caching, version control, uptime, and deployment. The goal is not to rebuild those pieces every time you try a new idea, but to layer intelligence on top of what is proven.

AI that is boringly reliable will outperform AI that is impressively fragile every time. Flashy experiments come and go, but dependable systems quietly compound. The work that lasts is rarely the loudest; it is the work that keeps working.

Get started with a small, actionable first step

AI is everywhere now, but most of it doesn’t stick. It’s easy to build a demo. It’s much harder to build something people rely on.

The teams that make it work don’t chase every new release. They stay close to their real workflows, the ones that already matter. They use AI to remove friction, not to start over. Over time, that kind of thinking adds up. Systems get steadier. People make better decisions. The work starts to compound. That’s what lasting AI looks like.

And we can say what it looks like, because we’ve seen it happen thousands of times in the organizations that build with Glide. AI is really a battle of small victories. Instead of one AI feature that improves efficiency by 50%, it’s nearly always 50 AI features that each improve efficiency by 1%.
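A quick back-of-the-envelope check of that arithmetic, as a sketch only. The two models below are simplifying assumptions, not measurements: in one, each 1% gain simply adds to the others; in the other, each gain applies on top of the previous ones. Real gains will depend on how the features interact. The function names are illustrative.

```python
def additive_gain(per_feature_gain: float, feature_count: int) -> float:
    """Total fractional gain if each feature's improvement simply adds up."""
    return per_feature_gain * feature_count


def compounded_gain(per_feature_gain: float, feature_count: int) -> float:
    """Total fractional gain if each feature multiplies on top of the others."""
    return (1 + per_feature_gain) ** feature_count - 1


if __name__ == "__main__":
    print(f"additive:   {additive_gain(0.01, 50):.1%}")
    print(f"compounded: {compounded_gain(0.01, 50):.1%}")
```

Under these assumptions, fifty 1% improvements come to 50% if they add and roughly 64% if they compound, so many small features can match or exceed one large one.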

It’s not dramatic. It’s dependable. And it helps teams spend more time on the parts of work that are still deeply human.