Generate software with AI

Create custom business software with a text prompt.

Introduction

One of the recent projects we've been working on at Glide is an AI app generator. Given a plain-English prompt, it generates a schema, some data, and a basic Glide App.

This project started out as a question: how can we make it easier for new users to learn Glide? Sometimes users come to Glide with specific ideas, and other times they just want to experiment. Either way, if they’re they're unfamiliar with some of the nuances and mental models associated with Glide, they may not know how to get started (for example, how to structure their data or how to use advanced features like relations, rollup columns, and user profiles). Ultimately, they may get frustrated trying to make Glide do what they want.

How can we make it easier for these new users to pick up some of the concepts they’ll need to be successful with Glide?

Basic structure of a Glide App

For context, it’s first worth describing the basic structure of a Glide App. In Glide, apps often use data that lives in a data source like Google Sheets or Glide Tables. For example, a grocery-tracking app might use an `Ingredients` table, where each row describes an ingredient. This table might have columns like `name`, `cost`, `color`, etc.

Glide can derive complex relationships between the tables, columns, and rows that drive an app. For example, a recipes app might have an associated `Recipes` table, and each item in that table might reference multiple rows in the just-mentioned `Ingredients` table (for example, the recipe for a ham and cheese sandwich might reference the `ham`, `cheese`, and `bread` rows of the `Ingredients` table). In Glide, we call this a relation, and it’s just one type of computation we can run on an app’s underlying data (others include rollups, template strings, and more).

A Glide App is intertwined with its data source. For example, changing the data in Google Sheets changes the data that shows up in your app, and vice versa. In fact, Glide uses the schema of your data source (its tables and columns) to generate a default app.

If you structure your data in a specific way, Glide can produce relatively intelligent default apps. And, as you work with multiple Glide apps, you’ll build up an intuition for the kinds of schemas that Glide finds meaningful. However, new users won’t have that knowledge or intuition, and this can trip them up.

This project’s goal is to give new Glide users a starting point. No matter if they have a specific idea or just want to experiment, we can use the short description they provide to generate a relevant data schema, some data for it, and a basic app they can use to start exploring Glide. And, as they play with the app, they’ll get a better understanding of how to structure their data in ways that unlock some of Glide’s more complex features, like relations.

The challenge, though, is to use that short, plain-English description to generate a relevant data schema and its associated data.

GPT-3?

We had an inkling that GPT-3 might be a good candidate for doing this given its superb text completion, so we gave it a try. Initially, we started very simple - could GPT-3 output just a relevant schema given a prompt?

Given its superb text completion abilities, we had an inkling that GPT-3 might helps us solve this problem. So, we gave it a try, starting simple: given a prompt, could GPT-3 output a relevant schema?

At first, the answer was no. Over time, though, we found that we could get higher quality results by priming the base, OpenAI models with good, clear examples. There are many nuances to prompt engineering (the process of finding the best prompts to give GPT-3)—your prompts "program the model," and poor or unclear examples yield bad results.

In particular, we found that making the most complicated parts of the task simple, clear, and straightforward most significantly improved the quality of our results. As we learned, GPT-3 is essentially like a 3-year-old that’s trained on the entire internet.

Slowly, after a couple of examples, we got the hang of it:

Prompt

description: I want an app that manages orders for my customersresponse: You will need a table for storing customer information, a table for order information, and a table for products. Each row in customer information should have a column for name, e-mail address, and phone number. Each row in order information should have a column for id, cost, and product id. Each row in products should have an id and cost. description: I want an employee directory that contains information about my employees and where they work.description: I want an employee directory that contains information about my employees and where they work.Result

response: You will need a table for storing employee information, a table for storing employee work location, and a table for storing employee phone numbers. Each row in employee information should have a column for name, e-mail address, phone number,Not bad! But, we needed results with more structure. So, we tried this:

Prompt

Q: I want an app that manages orders for my customers.A: Tables: Customer Information (with columns for name, e-mail address, phone number), Order Information (with columns for id, cost, list of product ids)Q: I want an employee directory that contains information about my employees and where they work.A: Tables: Staff (with columns for name, title, phone number, e-mail, location), Locations (with columns for name, address, list of people who work there.Q: I want an app that contains information to track issues across different buildings.A: Tables: Buildings (with columns for name, phone, address, issues), Issues (with columns for subject, notes, building name)Q: I want an app to manage my basic expenses.Result

A: Tables: Accounts (with columns for month, amount, category), Expense (with columns for type, amount)Q: I want an inventory app to track the items I have in stock.A: Tables: Inventory (with columns for item_id, date_taken, item name), Items (with columns for item_id, name, quantity)Better! We could use these results.

This work confirmed our first hypothesis: GPT-3 can generate relevant data schemas (tables and columns). But can it generate data, too?

The answer here turned out to be a little more unclear. However, for a single-table schema, GPT-3 can usually output a couple of rows of decent data:

Prompt

Q: Customer Information name | e-mail address | phone number |A: Jane Smith | jane@gmail.com | 402-784-9003 | John Doe | john@gmail.com | 168-302-4023 | Mark Matthew | mark@gmail.com | 583-234-4391 |Q: Staff name | title | phone number | e-mail | location |A: Brandy Fry | Manager | 479-940-2310 | brandy@gmail.com | Menlo Park | Kay Kramer | Software Engineer | 234-284-2039 | kay@gmail.com | Midtown Atlanta | Rebecca Johnson | Product Manager | 129-203-1301 | rebecca@gmail.com | Menlo Park | LocationsQ: Buildings name | phone | address | issues |A: Buildings Sunflower | 123-402-3012 | Battery Street | Broken AC, Poor Insulation | Tulip | 302-194-2034 | 767 NW Street | Broken AC |Q: Order Information id | cost | list of product ids |Result

A: 1 | 100 | {(1, 2, 3)} | 2 | 200 | {(4, 5, 6, 7)} | 3 | 300 | {(8, 9, 10, 11)} |Another, more promising result:

Prompt

Q: Customer Information name | e-mail address | phone number |A: Jane Smith | jane@gmail.com | 402-784-9003 | John Doe | john@gmail.com | 168-302-4023 | Mark Matthew | mark@gmail.com | 583-234-4391 |Q: Staff name | title | phone number | e-mail | location |A: Brandy Fry | Manager | 479-940-2310 | brandy@gmail.com | Menlo Park | Kay Kramer | Software Engineer | 234-284-2039 | kay@gmail.com | Midtown Atlanta | Rebecca Johnson | Product Manager | 129-203-1301 | rebecca@gmail.com | Menlo Park | LocationsQ: Buildings name | phone | address | issues |Result

A: Menlo Park | 479-940-2310 | 1149 Cheshire Bridge Rd NE, Atlanta, GA, 30324 | flooding Paulo Alto | 293-294-2992 | 1285 Dodds Avenue, Atlanta, GA, 30324 | flooding Midtown Atlanta | 234-284-2039 | 1030 North Avenue, Atlanta GA 30309 | floodingQ: Appointments staff | date | time | description |A: Dixie Gale | Friday, February 16 | 9:00am | meeting Brandy Fri | Friday, February 16 | 9:00am | meetingFrom these experiments, we noticed that GPT-3 struggles when generating data for two or more tables, especially when there’s a relationship between them (for example, the Ingredients and Recipes tables described above).

Our First Prototype

Our experiments weren’t conclusive, but they were strong enough to encourage us to build a prototype:

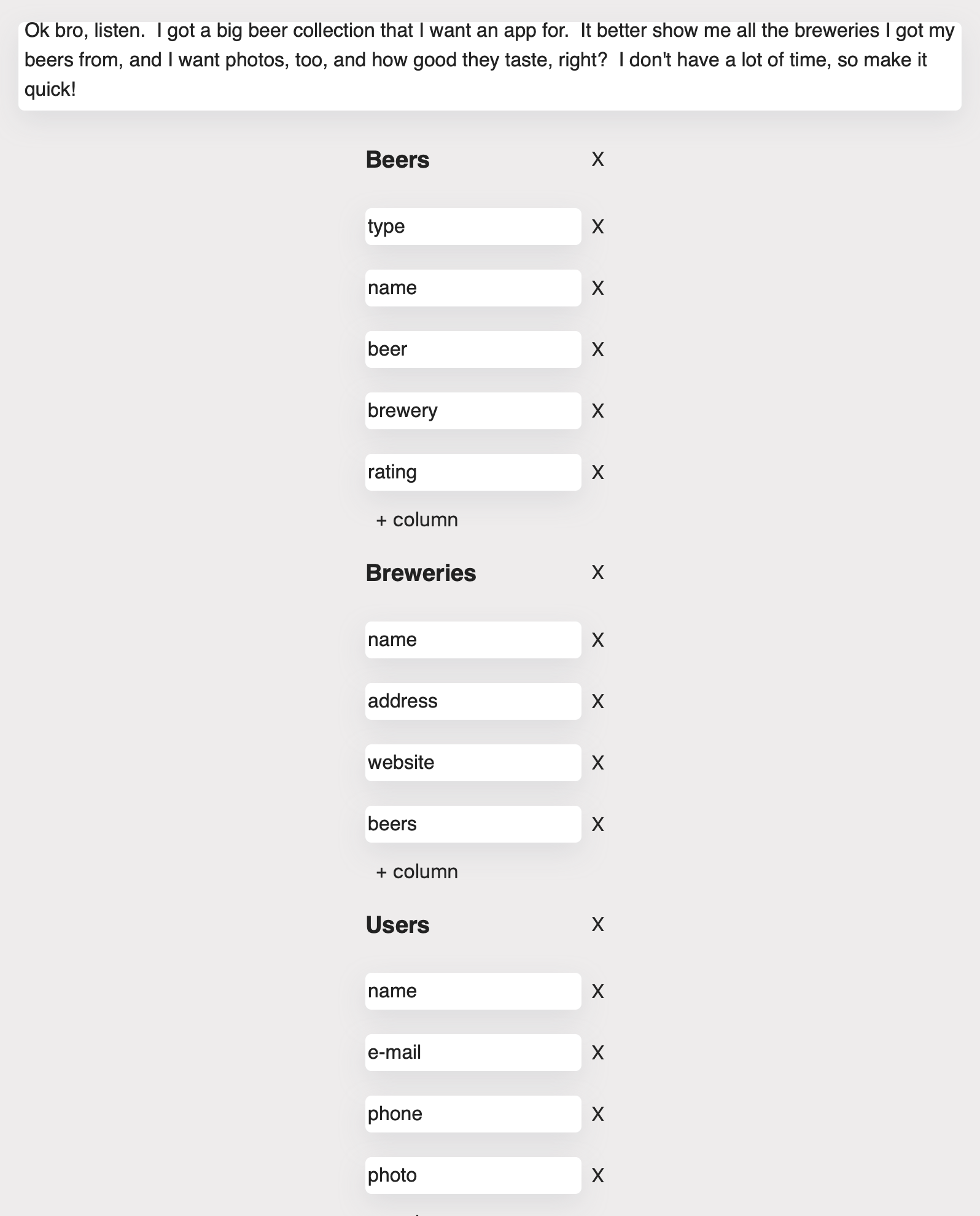

The prototype started out simple: enter a description for an app, and it generates a possible schema. Each title is a table, and each text box is one of its columns.

We started playing around, having fun with the tool and its output:

In examples like these, we noticed GPT-3 implicitly detecting relations. Here, for example, the last column in the `Breweries` table is `beers`—which happens to be another table. This was a promising result, and we decided to run with it.

Detecting data types and generating data

Next, we needed to figure out how to detect column data types and generate data (including data thats spans across tables).

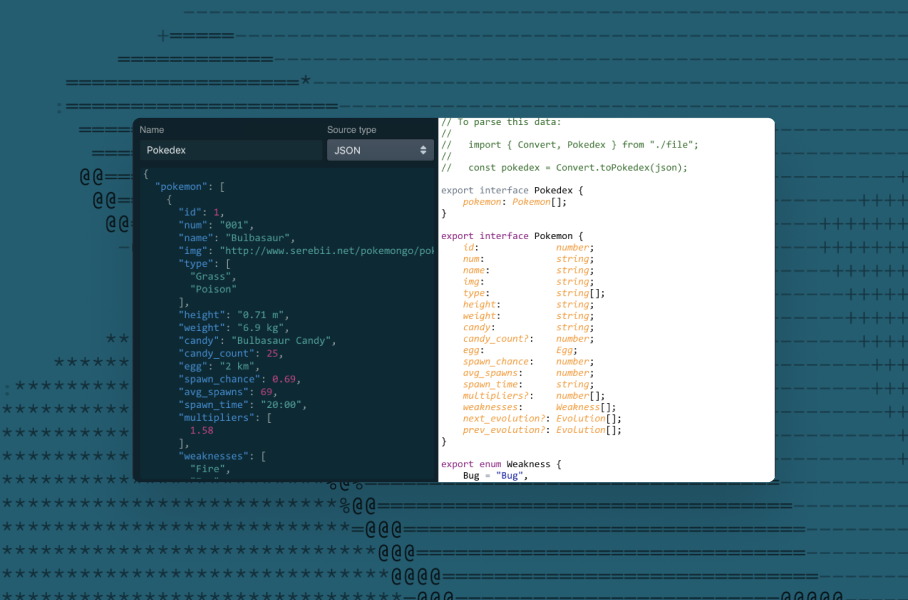

Detecting data types

Detecting data types turned out to be easy, since we could just add data types to the examples we gave to GPT-3. For example:

Q: I want an app that manages orders for my customers.A: Tables: Customer Information (with columns for name<string>, e-mail address<string>, phone number<number>), Order Information (with columns for id<string>, cost<number>, list of product ids <Products>), Product (id<string>, cost<number>)Q: I want an employee directory that contains information about my employees and where they work.A: Tables: Staff (with columns for name<string>, title<string>, phone number<number>, e-mail<string>, location<string>), Office Locations (with columns for name<string>, address<string>, list of people who work there<Staff>.Q: I want an app that contains information to track issues across different buildings.A: Tables: Buildings (with columns for name<string>, phone<number>, address<string>, list of issues<Issues>), Issues (with columns for subject<string>, notes<string>, building name<string>, date <date>)Q: I need an app to track my gym workouts.A: Tables: Workout (with columns for date<date>, duration<number>, list of exercises<Exercises>, weight<number>, notes<string>), Gym Exercises (with columns for name<string>, description<string>, weight<number>).Q: I want to build an app tracks my Lego sets.A: Tables: Lego Sets (with columns for name<string>, number<number>, color<color>), Lego Pieces (with columns for name<string>, cost<number>, list of pieces<Pieces>)We could prime GPT with different sets of these inputs, each one specifying a set of tables, columns, and data types.

Generating Data

We decided to generate data for one table at a time. For prompts that produced schemas with multiple tables, we used previous tables as input for the next ones. This helped ensure high table quality (since GPT-3 tends to give worse results for longer input), and it gave GPT-3 the data it needed to generate relations.

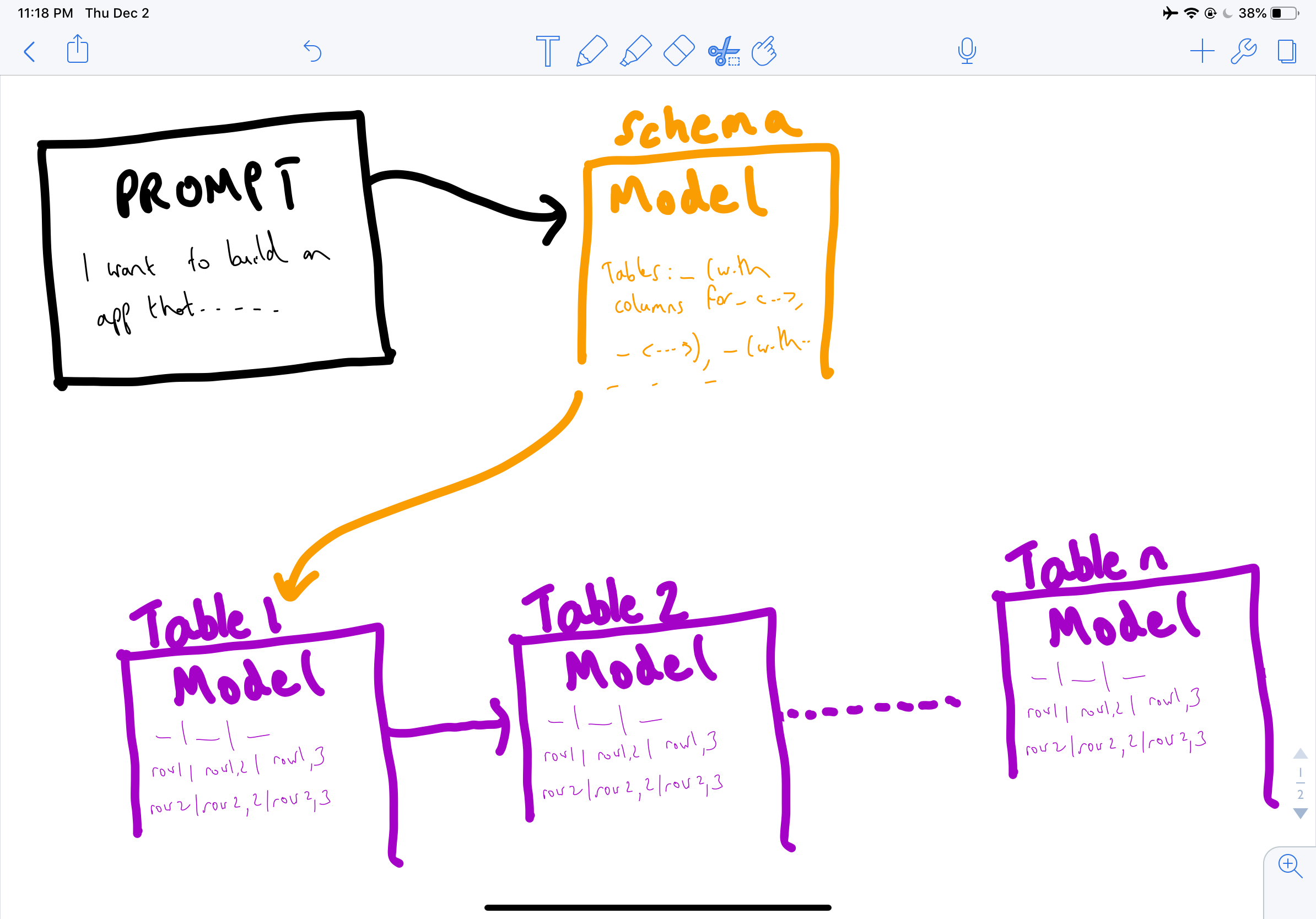

The entire flow looked something like this:

To start, we used the DaVinci model with specific inputs for the schema (orange box), and a different inputs for the data (purple boxes). For subsequent tables, in addition to the same set of inputs, we'd feed in the results of the previous tables (as explained above).

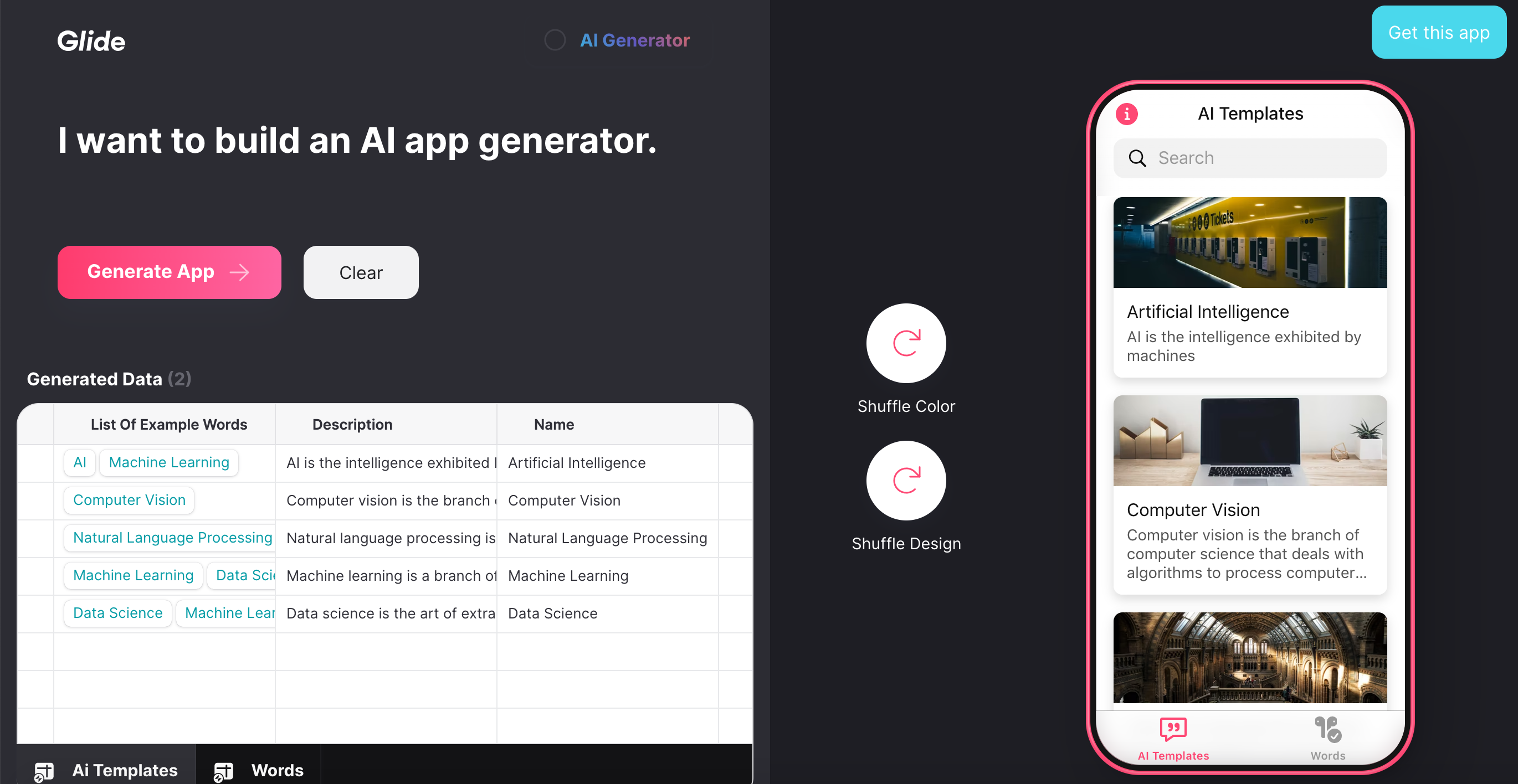

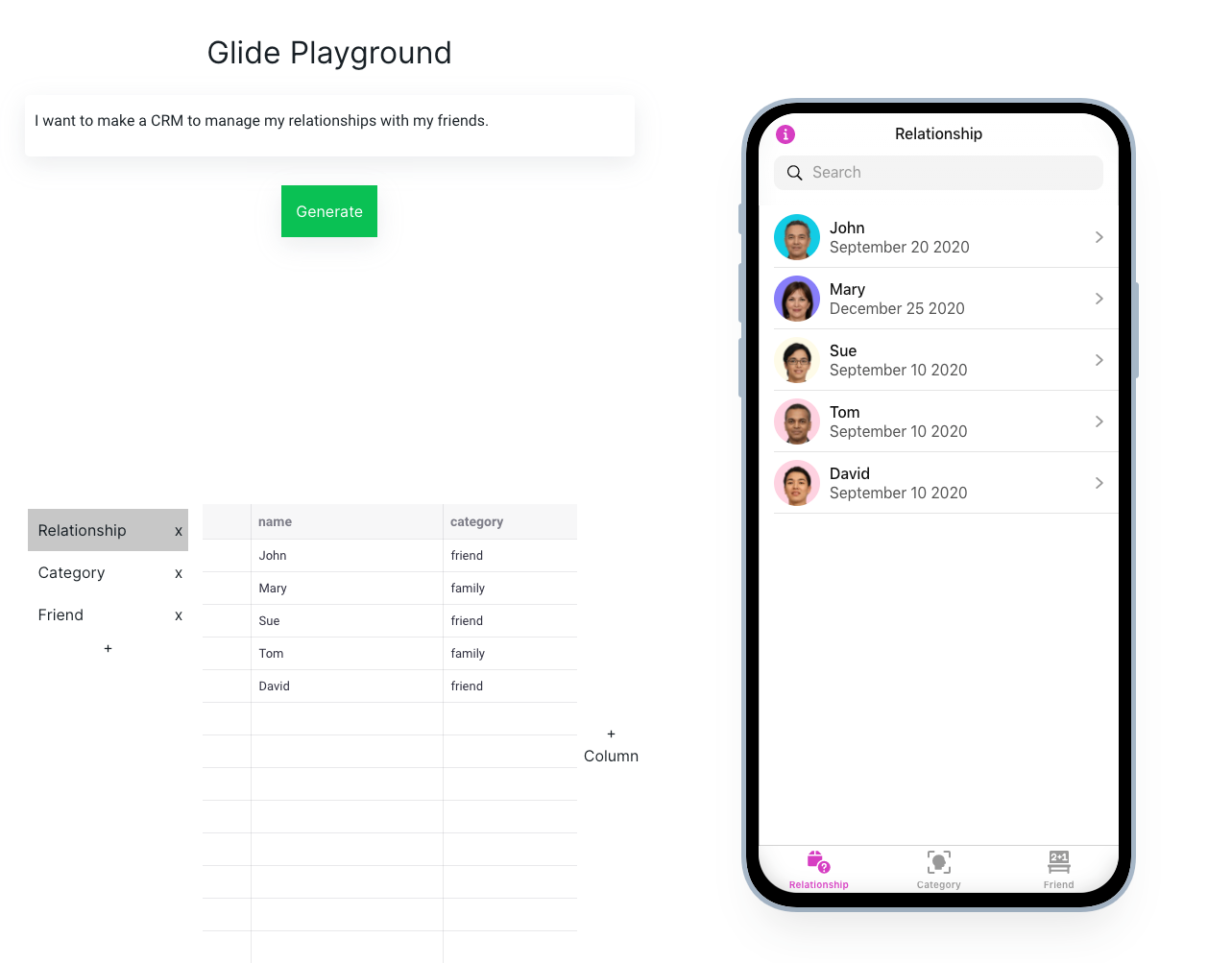

A couple of days later, we had our first working prototype:

And, after iterating a little bit on the primed inputs, we had a slightly better version:

Interestingly, although our hypothesis to generate tables one at a time made sense in theory, the actual result was brittle and inconsistent. The schema generation was usually quite good (or at least acceptable), but generating a couple of rows of domain-specific data was challenging—especially with multiple tables and relations involved.

Clearly, our project had promise. The prototype was imperfect and brittle, but it was fun to play with and had potential. How we integrate this project more closely with Glide?

Integrating with Glide

Next, we decided to pull our project into Glide’s codebase, rather than running it as a separate project. And there were things to improve and add, too:

- Data generation needed to be much more stable. This meant more experimentation with GPT-3, trying to figure out the best way to structure the problem. One key takeaway: building complicated applications on top of GPT-3 is mostly about figuring out how to structure or split up your units of work into logical chunks. If you ask too much of GPT-3 all at once, it will struggle. But if you ask too little, you won’t take advantage of its full potential.

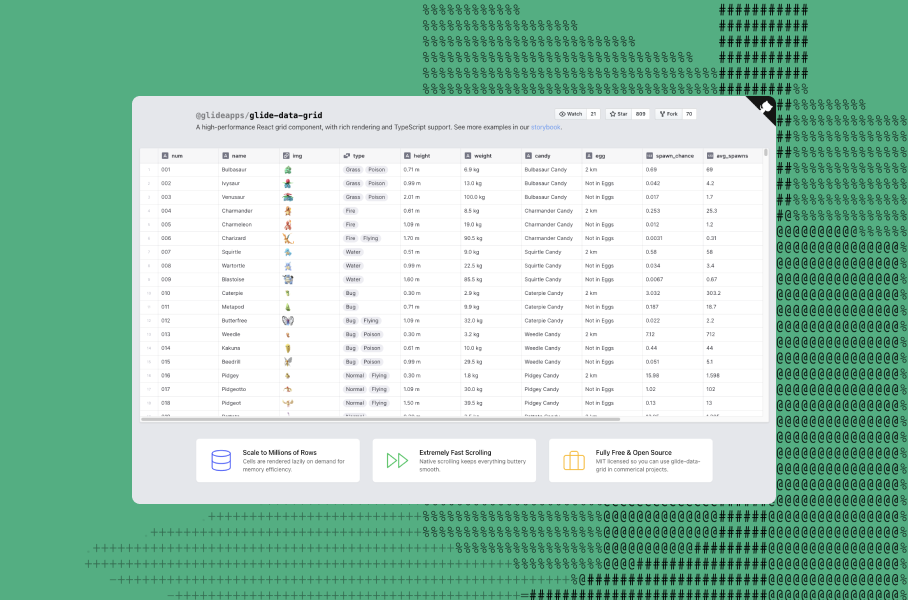

- We wanted to use Glide’s powerful, in-house, canvas-based data editor, to display the generated data.

- We still had to create a Glide app from the generated schema and data. Glide has some pretty good heuristics it uses to generate a default app for a given schema, so we planned to use them, but with a bit of work to add more functionality and clean things up.

Using Glide’s in-house data editor

Since it wasn’t clear how we’d make the GPT-3 results more stable (this would require major changes to our initial pipeline and to our parser), we started by integrating Glide’s data editor into our prototype. A week or two later, we had most of the functionality of our first prototype running inside Glide’s codebase. For a given description, it could generate a schema and some data, and then show the results in Glide’s data editor:

Making GPT-3 Generation More Stable

After we had this basic functionality working inside of Glide, we revisited our GPT-3 experiments. We tried various things, but we eventually settled on doing all of the schema and data generation in a single completion. With this approach, the inputs used to prime GPT-3 looked like this:

Q: I want an app that manages orders for my customers. Tables: 1. Customer Information (with columns for name<string>, e-mail address<string>, phone number<number>), 2. Order Information (with columns for id<string>, cost<number>, list of product ids <Products>), 3. Product (with columns for id<string>, cost<number>)A: 1. Customer Information name | e-mail address | phone number Jane Smith | jane@gmail.com | 402-784-9003 John Doe | john@gmail.com | 168-302-4023 Mark Matthew | mark@gmail.com | 583-234-4391 Dixie Gale | dixie@gmail.com | 481-231-3349 Natalie Vu | natalie@gmail.com | 921-291-2934 2. Product id | cost 0 | $5 1 | $10 2 | $20 3 | $15 3. Order Information id | cost | list of product ids a | $15 | {0, 1}b | $25 | {0, 2}c | $35 | {2, 3}d | $30 | {1, 2}e | $35 | {0, 1, 2}###Q: I want an employee directory that contains information about my employees and where they work. Tables: 1. Staff (with columns for name<string>, title<string>, phone number<number>, e-mail<string>, location<string>), 2. Office Locations (with columns for name<string>, address<string>, list of people who work there<Staff>).A: 1. Staff name | title | phone number | e-mail | location Brandy Fry | Manager | 479-940-2310 | brandy@gmail.com | Menlo Park Kay Kramer | Software Engineer | 234-284-2039 | kay@gmail.com | Midtown Atlanta Rebecca Johnson | Product Manager | 129-203-1301 | rebecca@gmail.com | Menlo Park Olivia Rodrigo | Principle Software Engineer | 289-492-3994 | olivia@gmail.com | Paulo Alto Santiago Gonzalez | Manager | 293-294-2992 | santiago@gmail.com 2. Office Locations name | address | list of people who work there New York | 15 East Clark Rd New York, NY 10024 | {Brandy Fry, Kay Kramer}San Francisco | 794 Mcallister St San Francisco, California, CA, 94102 | {Olivia Rodrigo, Santiago Gonzalez}Miami | 467 SE. Birchwood Ave. Tallahassee, FL 32304 | {Rebecca Johnson}###Q: I need an app to track my gym workouts.A: [..GPT-3's completion....]This approach wasn’t perfect, but it was the best option we tried. Unfortunately, as we previously realized, asking too much of GPT-3 can cause more problems than it solves. When it's hard to break work into smaller units, and you ask GPT-3 to perform a complex task all at once, it can produce strange failure modes and give you bad results.

Some problems we were seeing:

- Data not relevant to the prompt, or that didn’t match the schema.

- Missing, incomplete, or badly structured schemas.

- GPT-3 blowing up and returning gibberish.

Switching our pipeline to a single function call simplified and stabilized the pipeline in some ways, since there were fewer moving pieces—but it turned out to be a double-edged sword. Asking too much of GPT-3 at once greatly increased the number of possible failures in the resulting completion. We found that, between generating a good schema, inferring relations, and generating data that abided by schemas, there were so many ways that GPT-3 could go wrong. And there were only so many safety nets we could implement when parsing a completion.

Our next bet was tofine tunethe models on a small dataset of good examples. Until this point, our models were using the standard completion endpoint, where you provide a couple of examples to prime your model each time you called the OpenAI API. Given how large these models were, and how much we were asking them to do, we figured that a few more good examples might go a long way toward improving the results. Also, this would greatly reduce our costs, since we wouldn't have to waste tokens on the same primed examples every time (GPT-3 charges you based on the number of tokens in your input and the resulting completion). When you fine-tune a model, you can provide far more examples to GPT-3 (since you don’t have to constrain your input to their 2048 token limit per API call), and you don’t have to prime your model with the same inputs on each API call. Calling the API with your fine-tuned model uses the same weights from your dataset—just provide the prompt, and OpenAI will give you the resulting completion.

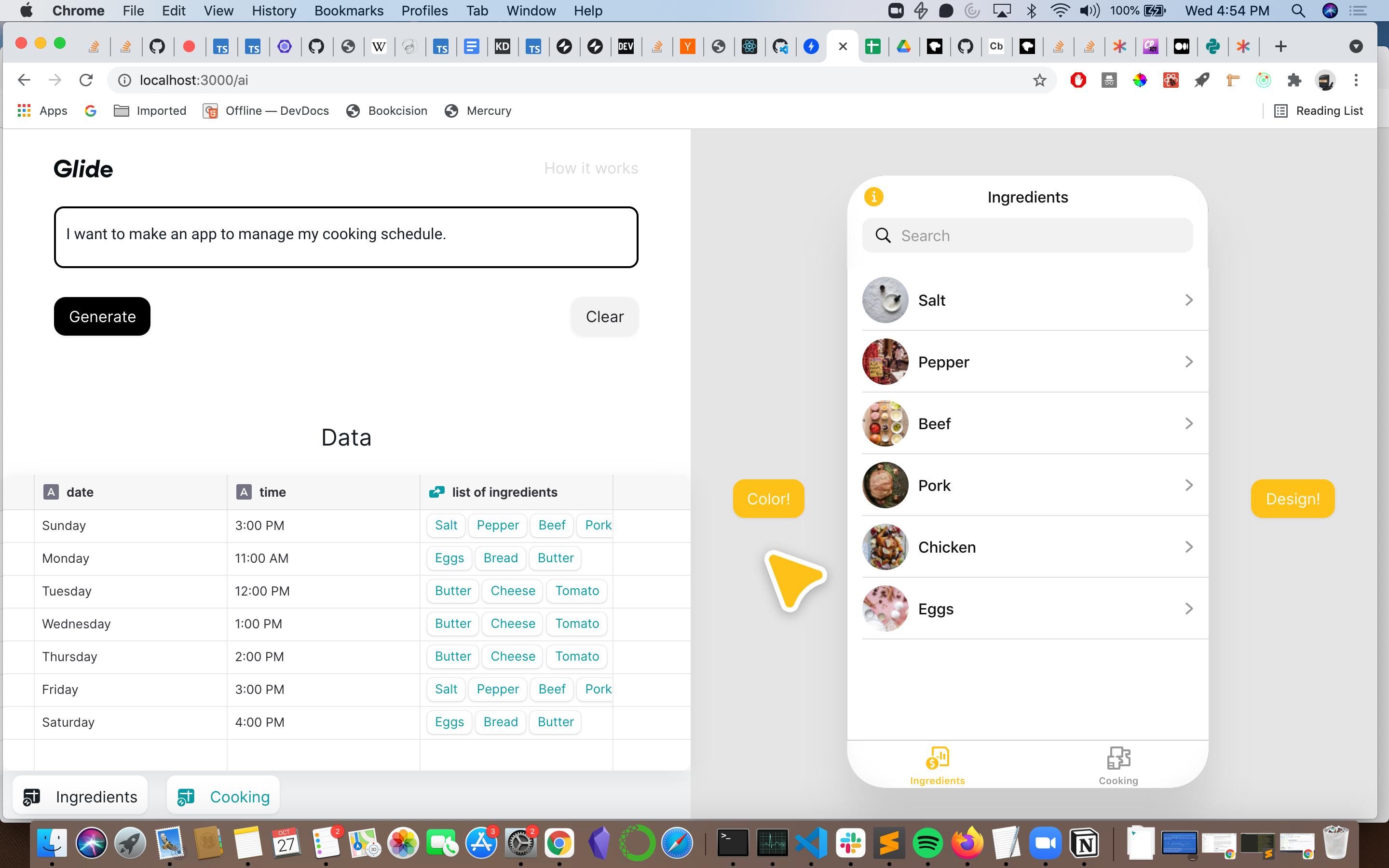

We started with ~17 examples (prompts and their associated completions), and we used the Curie model (the second largest and most capable model). Prompts consisted of app descriptions like "I want to build an app to manage my cooking schedule." Completions consisted of the corresponding schemas and data. The results were noticeably better, at a fraction of the cost.

However, GPT-3 still broke regularly, and we decided to come back to it later. We’d made improvements, but it wasn’t anywhere near as stable as we wanted.

Generating a Glide App

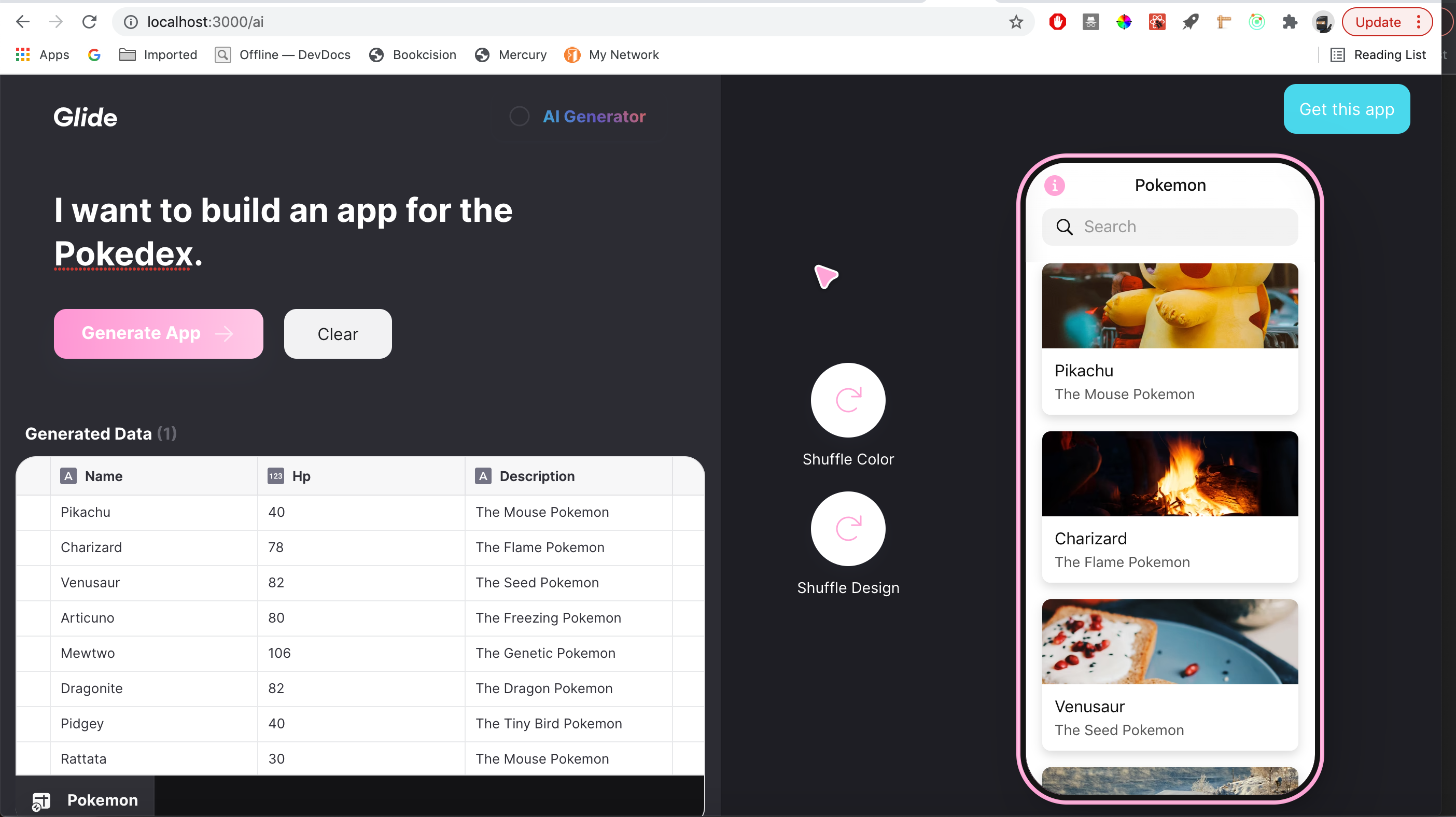

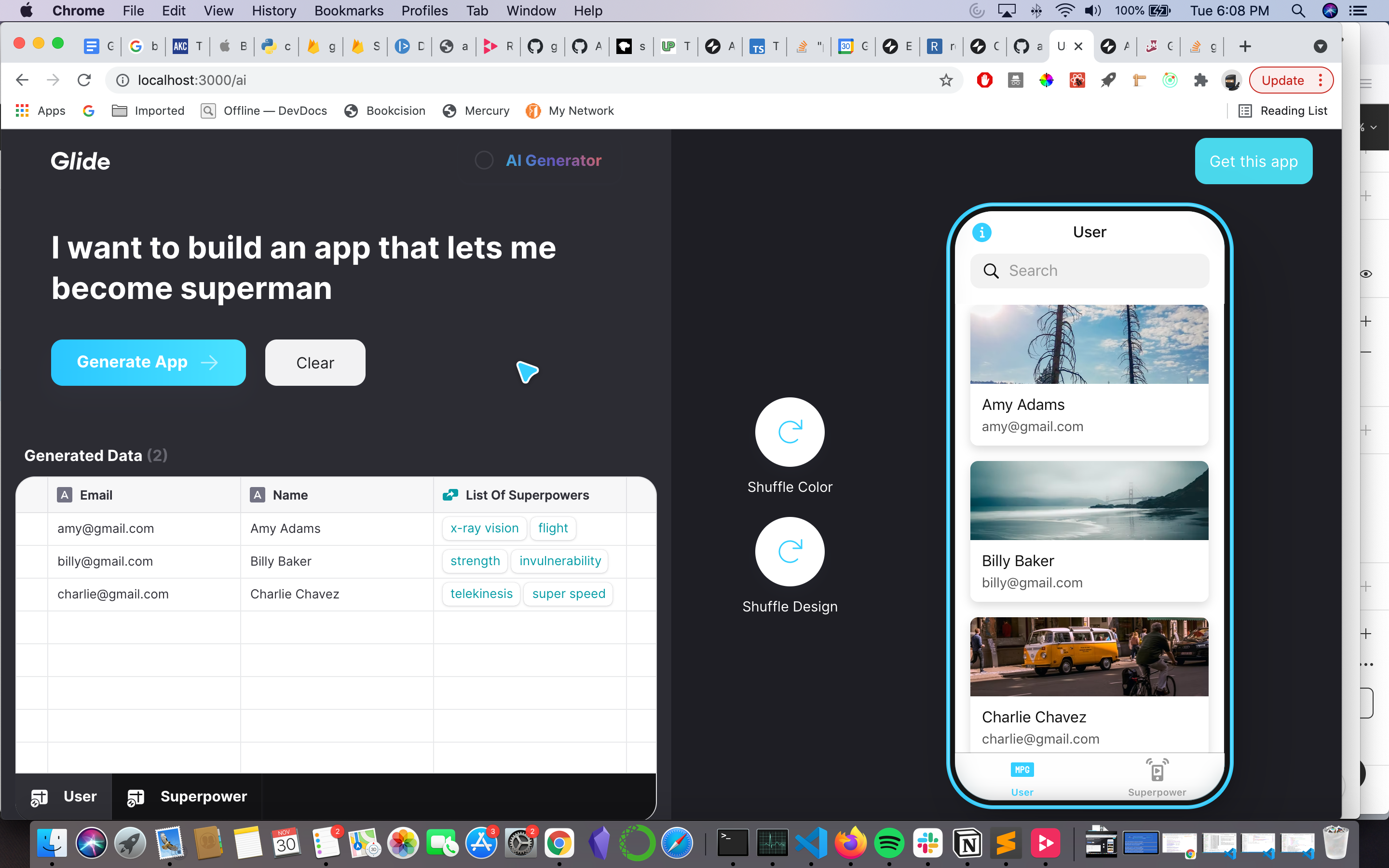

Our next goal was to generate a Glide app. After a couple of weeks of effort, we’d done it: we could generate a fully functioning Glide App based solely on a user’s description.

In this demo, notice how we infer and display relations in the app. When we click on a row in the `Cooking` table, it displays the screens for all of the related `Ingredients`. Very cool!

Next, we made it possible to for users to change the app’s color theme, helping them make their generated apps their own:

We also started finding and choosing relevant images for each result. For example, for apps with people-related data, we could show Glide’s AI-generated avatar images.

Design

At this point, the functionality was improving steadily, so we decided to pivot and focus a little more on the design.

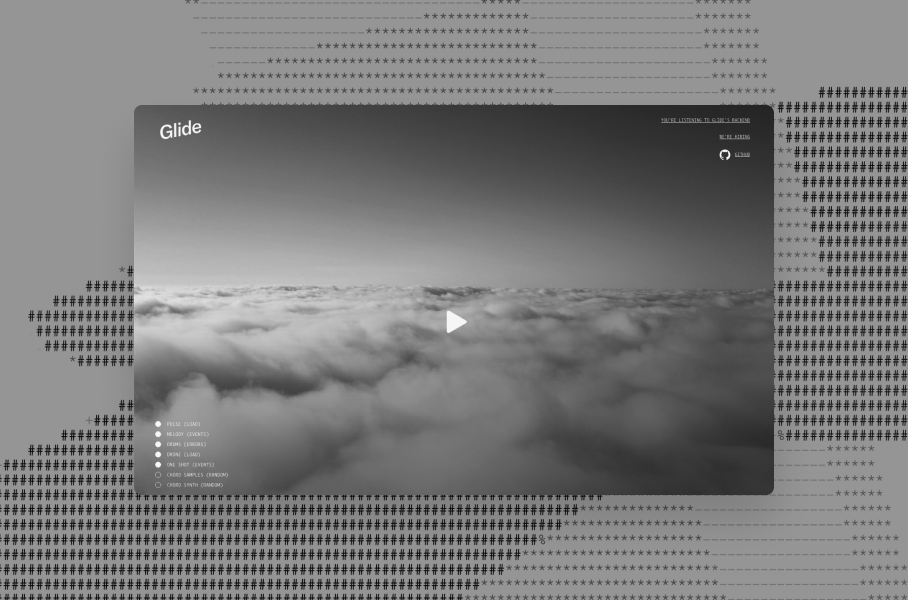

The design went through many different configurations. We wanted the experience to feel both futuristic and elegant, but also fun and playful. Making great software requires both.

Here were some design mockups that we played with:

And in the actual product, we tried a couple of different experiences.

One of the fun features that came out of this was a cursor that changed color with the app!

Eventually, we settled on this:

Wine & Glide

We took a short break to present a live demo of our project at Glide's Wine & Glide eventand everyone was super excited! There were lots of other cool projects, definitely check it out:

Improving our models

But, back to the problem at hand. With the design semi-polished and most of the functionality in place (minus a couple of features), we circled back, once again, to the brittle and unstable model.

Our first hypothesis was that the models needed more data. So we spent a week adding more data, bringing the dataset to around 60, hand-made, good examples. In context of most AI datasets, this is a tiny amount of data—but it was still a 3x improvement, and we were interested to see how it would affect data generation.

Unfortunately, we were surprised and disappointed that the models largely didn’t improve. We even tried fine-tuning the largest and most capable model, and the results were still incredibly brittle.

Overall, we learned that—because of the complex nature of this problem—good, reliable, stable completions would require a very large amount of data. We can't be sure, but we suspect that an order of magnitude (or two) more data might improve the completions.

We didn’t have that much data, though, so we had to work with what we had.

Final Features

Next, we worked on adding some final missing features:

- A safety filter to remove harmful or toxic completions:

- The ability to open a generated app in the Glide builder, so users can keep customizing it:

- Cute defaults to display when GPT-3 crashes (a puppies app, a kitties app, etc.)

- Other things here and there, and lots of bug fixes

Improving Our GPT-3 Parser

We also spent some time tweaking our parser to handle as many GPT-3 failure modes as possible. There was no possible way to catch everything, but we tried to cover as much as possible—missing columns, invalid relations, empty tables, mismatched data, you name it.

So, instead, we focused on making the parser as robust as possible. The result was a much more stable parser that covered many, many edge cases, and failed gracefully with a cute default app when things went wrong.

As a result, the parser become progressively more complex. Had we split schema and data generation into smaller chunks of work, this may not have been the case—but the nature of this problem made that difficult to do (and even when we tried that, in the first version of our prototype, the results weren’t great).

Lessons learned

We learned many things from this project. Here are some key takeaways:

- Building a complicated application on top of GPT-3 is surprisingly non-trivial. You’re somewhat at the mercy of its weakest completions and strange failure modes. The results can be complete gibberish, or funny and delightful.

- Most of the hard work comes down to figuring how to structure your tasks for GPT-3, and the common language you use to interact with it. The examples you use to prime it are important, and structuring those examples in a clear, concise, and useful way is even more important.

- If you're building an application that has to parse structured data from a completion, it will be a difficult challenge—especially if you need the completion to be represented in some common format or language. Building a robust parser for a semi-structured language with confusing failure modes is hard and messy. If you’re building something that doesn’t require you to parse completions (maybe a chatbot, for example), you’re in luck.

- GPT-3 is incredibly smart and incredibly dumb at the same time.

- Using AI-augmented software can be amazingly fun. We can't count the number of times we were rolling on the floor with laughter at how awesome the generated completions were. Often, they didn't produce great results, but when they did it was delightful.

This experiment was both a success and a failure, for many reasons. We learned a lot, and we're excited to keep exploring how AI can make building software with Glide even better!

P.S if you'd like to try our AI playground out, it's accessible at [https://staging.heyglide.com/ai](https://staging.heyglide.com/ai)

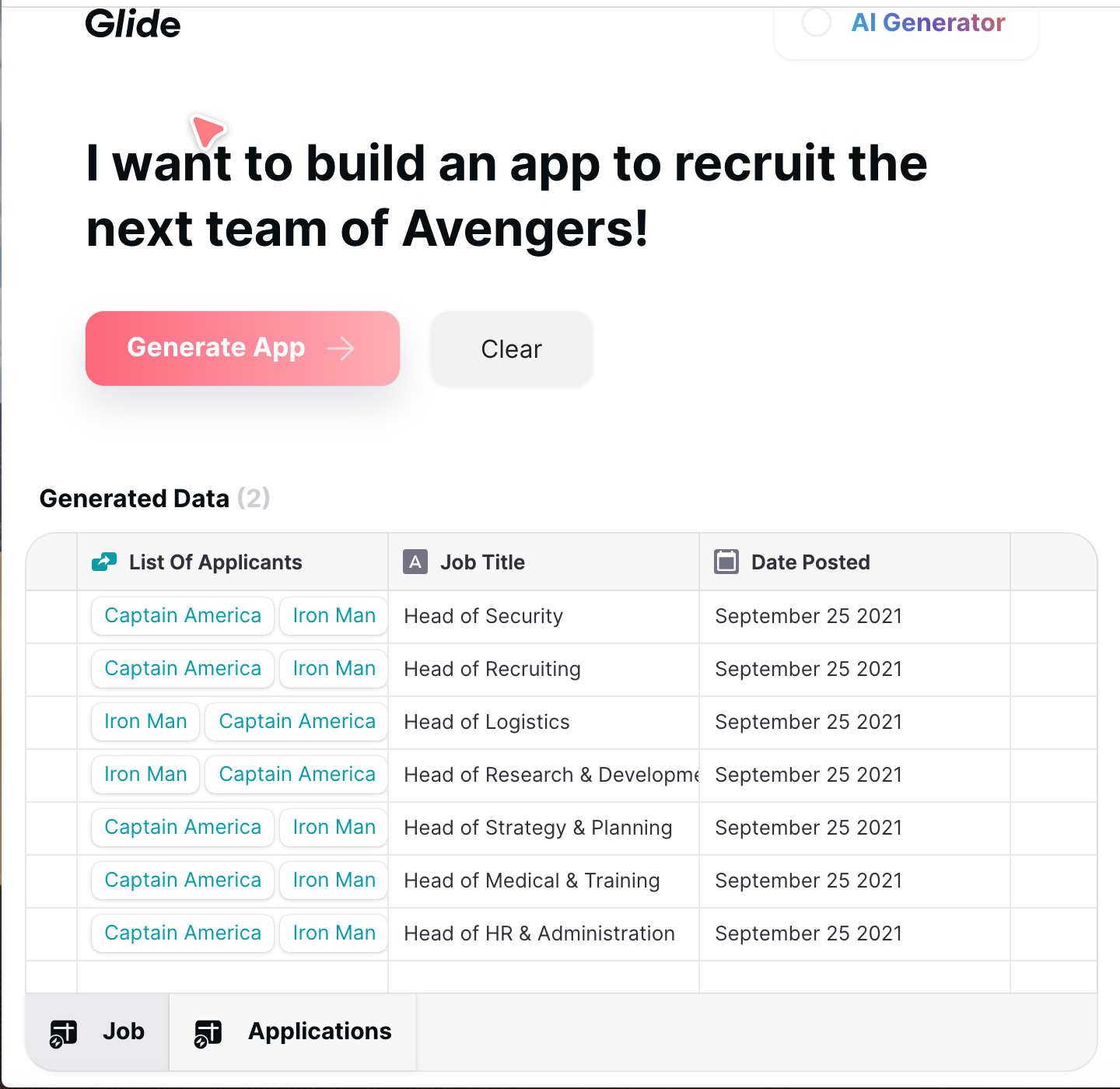

Bonus: some of our favorite completions

Here's a very short, incomplete collection of some our favorite completions!