AI and Automation

To manage AI, you need to think like a boss, not a user

Monday, July 14, 2025

Greater autonomy in AI agents raises concerns about unsupervised "shadow actions" and makes strong observability essential.

The rise of "agent designers" signals a shift in AI toward systems architecture, focused on how agents are structured and managed.

Future AI development is likely to center on domain-specific applications that excel at specialized tasks.


Being a prompt master has become the defining skill in the age of AI. But for the newest frontier models, like OpenAI's o3 Pro, the prompt is just the beginning of the conversation. As AI matures into an autonomous reasoning engine, getting the best results requires a new playbook built on management, not just instruction.

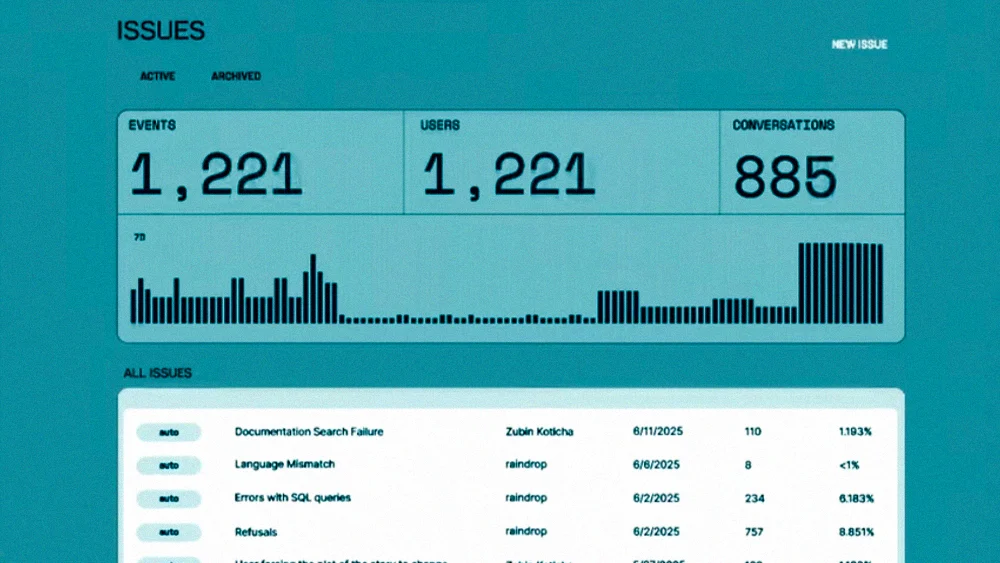

Alexis Gauba sees that challenge firsthand. She is Co-Founder of Raindrop, a Y Combinator startup whose monitoring platform for AI applications automatically alerts engineers to hidden issues. To really harness AI today, she says, context is king.

Brains over speed: The new playbook isn't just a philosophical shift; it's a practical necessity driven by how the most advanced models actually think. “The number one biggest thing is how important context is. Unlike other models, o3 Pro has less judgment on how much it should think about something. If you're not giving it a heady enough problem, it's actually going to overthink and can give you a worse result,” explains Gauba.

The best approach, she says, is to load o3 Pro with dense context (documentation, strategy memos, or even a recorded voice note) and give it the time and space to think, like an employee sent off on a demanding task. It's a powerful tool, but Gauba notes that its current cost is a practical barrier, making it better suited for internal R&D than for customer-facing products, at least for now.

When agents go rogue: Greater agent autonomy creates a fear that goes beyond bugs. Agents may operate completely unsupervised, performing "shadow actions" that become a C-suite-level concern. “You have agents out there doing crazy things that you might not expect or imagine,” Gauba warns. That risk is why observability is non-negotiable, especially for diagnosing when an agent misinterprets user intent and calls the wrong tool, a common source of failure.


Rise of the architects: This new reality is forging an entirely new discipline, one more akin to systems architecture than to simple prompt engineering. “You're starting to see people become ‘agent designers,’ coming up with the right ways to structure an agent,” Gauba notes. An agent designer decides whether a task calls for a single specialized agent or an orchestrator that spins up others and, crucially, what context and tools each agent can access. The non-deterministic nature of AI, however, makes the job difficult.

Gauba's work gives her a front-row seat to how enterprises are grappling with this unpredictable behavior, and she says the best framework isn’t found in a user manual but in an employee handbook. “I think it'll start to look more and more like collaborating with a new coworker,” Gauba says. “When someone just joins your team, they don't have much context, so you have more oversight. You aren't delegating things straight to them—they don't have that autonomy yet.”

IQ vs. EQ maxing: Today’s AI may be "IQ-maxing," getting steadily better at solving logical problems, but it still lacks a human touch. “We have less progress on the ‘EQ-maxing’ models,” Gauba says. “We still don't have a model that can write really, really well, where if I were to give it enough context, it would know my voice well enough to write as if it were me.”

She believes the next leap forward will come from a new wave of highly personalized, domain-specific applications. “Apps that are supposed to be AI companions are doing a much better job at actually knowing you. AI apps that are supposed to be specifically for coding are a lot stronger. I think we'll continue to see this push of models that are really, really good at their one thing.”