Data and Infrastructure

Data privacy is a CX trust fall, where ethical organizations win long-term

Monday May 12, 2025

Knowledge CEO Chris Anzalone stresses transparency in AI data practices to foster trust, calling for bold, clear disclaimers on data use.

Data collection is widespread, so the priority is using collected data ethically.

Genuine personalization wins over superficial data cues in customer interactions.

AI now has a hand in nearly every CX touchpoint, but the ethics of its use remain unsettled. With massive data collection come privacy concerns, and trust, not personalization, becomes what really sets a business apart.

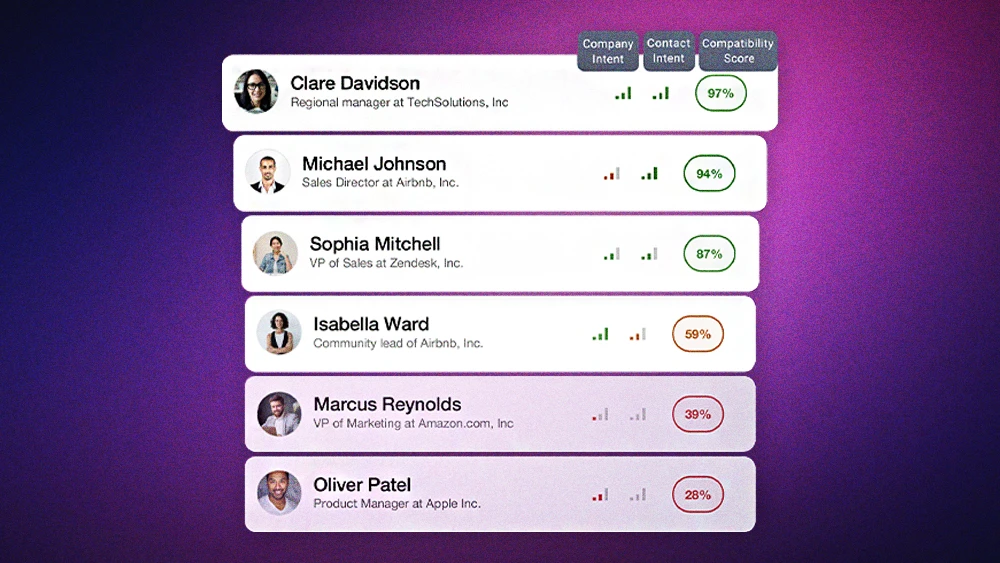

Chris Anzalone is the Founder and CEO of Knowledge, a platform providing person-level intent data to sales teams. He joined us to discuss taking a pragmatic approach to AI, balancing innovation with ethics, trust, and transparency.

Transparency over tactics: For Anzalone, building user trust starts with radical transparency—a sharp break from the industry’s habit of burying intentions in fine print. “Just be honest and tell people what you want to do and why you want to do it,” he says. “They’re much more inclined to say, ‘Yeah, you know what, I’m okay with that.’”

He contrasts this with shady, backlash-inducing moves—like quietly copying entire CRMs without clear consent—and argues that candor will win in the long run.

Be bold: Knowledge embodies this transparency in its own approach to user data. “Building trust for us is more centered around having a big, bold disclaimer in a way that’s obvious we’re being bold, and not trying to hide through terms of service,” Anzalone notes. “It needs to be very clear, not fine print but bold letters.”

No buried clauses, no ambiguity—just a direct statement that user data helps improve the product, not fuel someone else’s. “It will just make for a better experience for you,” he continues, emphasizing that users should not only understand what they’re agreeing to, but also have a real choice. “Give them a route to use your tool even if they don’t want that data share.”

Enhanced experience: Anzalone acknowledges the pervasive nature of data collection but points to its tangible benefits in everyday life. "As much as it sometimes feels invasive, it's usually beneficial," he states. "It makes for a better experience with the platform, and a better experience just in life."

He cites examples like Amazon's predictive recommendations or Google's UX optimizations, which, while data-intensive, demonstrably improve user experience and even business outcomes. "The privacy absolutist is the losing side of that argument."

The ethics factor: The issue is not the data itself, but how it's used. "We have a responsibility to not use that data, even though we have it," Anzalone says. "And I think that really lies on the ethics of the organizations and the leaders at those organizations to make those calls."

"There are a lot of ethical questions about how we utilize this data, but I don't think there's an ethical question around whether or not we collect this data," he says.

Dive deeper: Not all data is created equal. Referencing a social post isn’t the same as understanding a person's wants and needs, and pulling from an easy-access, shallow data pool is hardly a differentiator. “When everybody's doing it the exact same way, no one's doing anything,” Anzalone says. “There's zero differentiation. It's all just noise.”